The Structural Perspective

The structural perspective analyzes the association, arrangement, proximity, or connection between resources without primary concern for their meaning or the origin of these relationships.[1]

We take a structural perspective when we define a family as “a collection of people” or when we say that a particular family like the Simpsons has five members. Sometimes all we know is that two resources are connected, as when we see a highlighted word or phrase that is pointing from the current web page to another. At other times we might know more about the reasons for the relationships within a set of resources, but we still focus on their structure, essentially merging or blurring all of the reasons for the associations into a single generic notion that the resources are connected.

Travers and Milgram conducted a now-famous study in the 1960s involving the delivery of written messages between people in the midwestern and eastern United States. A person who did not know the intended recipient was instructed to send the message to someone who might know the recipient. The study demonstrated what Travers and Milgram called the “small world problem,” in which any two arbitrarily selected people were separated by an average of fewer than six links.

It is now common to analyze the number of “degrees of separation” between any pair of resources. For example, Markoff and Sengupta describe a 2011 study using Facebook data that computed the average “degree of separation” of any two people in the Facebook world to be 4.74.[2]

See http://oracleofbacon.org/ for a web-based demonstration of “Kevin Bacon Numbers,” which measure the degrees of separation among more than 2.6 million actors in more than 1.9 million movies. Its name reflects the parlor game “Six Degrees of Kevin Bacon,” a pun on “six degrees of separation” that is often associated with Travers and Milgram’s work; the game relies on the remarkable variety of Bacon’s roles, and hence the number of fellow actors in his movies (two actors in the same movie have one degree of separation). The average Bacon Number is 2.994, but it turns out that more than 300 actors are closer to the center of the movie universe than Bacon. Try some famous actors and see if their average numbers are greater or smaller than Bacon’s. (Hint: older actors have been in more movies.)

Many types of resources have internal structure in addition to their structural relationships with other resources. Of course, we have to remember (as we discussed in “Resource Identity”) that we often face arbitrary choices about the abstraction and granularity with which we describe the parts that make up a resource and whether some combination of resources should also be identified as a resource. This is not easy when you are analyzing the structure of a car with its thousands of parts, and it is even harder with information resources where there are many more ways to define parts and wholes. However, an advantage for information resources is that their internal structural descriptions are usually highly “computable,” something we consider in depth in Interactions with Resources.

Intentional, Implicit, and Explicit Structure

In the discipline of organizing we emphasize “intentional structure” created by people or by computational processes rather than accidental or naturally-occurring structures created by physical and geological processes. We acknowledged in “The Concept of “Intentional Arrangement”” that there is information in the piles of debris left after a tornado or tsunami and in the strata of the Grand Canyon. These structural patterns might be of interest to meteorologists, geologists, or others but because they were not created by an identifiable agent following one or more organizing principles, they are not our primary focus.

Find a map of the states (or provinces or other divisions) in your country. You probably think of some set of these as members of a collection. Other than their literal arrangement (e.g., “x is next to y, y is east of z”), how could you describe their relationships to each other within the collection? Are these relationships based on natural or unintentional properties or intentional ones? Example: in the United States, California, Oregon, and Washington are considered the “West Coast” and the Pacific Ocean determines their western boundaries. Some of the borders between the states are natural, determined by rivers, and other borders are more intentional and arbitrary.

Some organizing principles impose very little structure. For a small collection of resources, co-locating them or arranging them near each other might be sufficient organization. We can impose two- or three-dimensional coordinate systems on this “implicit structure” and explicitly describe the location of a resource as precisely as we want, but we more naturally describe the structure of resource locations in relative terms. In English we have many ways to describe the structural relationship of one resource to another: “in,” “on,” “under,” “behind,” “above,” “below,” “near,” “to the right of,” “to the left of,” “next to,” and so on. Sometimes several resources are arranged or appear to be arranged in a sequence or order and we can use positional descriptions of structure: a late 1990s TV show described the planet Earth as the “third rock from the Sun.”[3]

We pay most attention to intentional structures that are explicitly represented within and between resources because they embody the design or authoring choices about how much implicit or latent structure will be made explicit. Structures that can be reliably extracted by algorithms become especially important for very large collections of resources whose scope and scale defy structural analysis by people.

Structural Relationships within a Resource

We almost always think of human and other animate resources as unitary entities. Likewise, many physical resources like paintings, sculptures, and manufactured goods have a material integrity that makes us usually consider them as indivisible. For an information resource, however, it is almost always the case that it has or might have had some internal structure or sub-division of its constituent data elements.

In fact, since all computer files are merely encodings of bits, bytes, characters and strings, all digital resources exhibit some internal structure, even if that structure is only discernible by software agents. Fortunately, the once inscrutable internal formats of word processing files have become much more interpretable since they were replaced by XML-based formats in the last decade.

When an author writes a document, he or she gives it some internal organization with its title, section headings, typographic conventions, page numbers, and other mechanisms that identify its parts and their significance or relationship to each other. The lowest level of this structural hierarchy, usually the paragraph, contains the text content of the document. Sometimes the author finds it useful to identify types of content like glossary terms or cross-references within the paragraph text. Document models that mix structural description with content “nuggets” in the text are said to contain mixed content.

In data-intensive or transactional domains, document instances tend to be homogeneous because they are produced by or for automated processes, and their information components will appear predictably in the same structural relationships with each other. These structures typically form a hierarchy expressed in an XML schema or word processing style template. XML documents describe their component parts using content-oriented elements like <ITEM>, <NAME>, and <ADDRESS>, that are themselves often aggregate structures or containers for more granular elements. The structures of resources maintained in databases are typically less hierarchical, but the structures are precisely captured in database schemas.

The internal parts of XML documents can be described, found and selected using the XPath language, which defines the structures and patterns used by XML forms, queries, and transformations. The key idea used by XPath is that the structure of XML documents is a tree of information items called nodes, whose locations are described in terms of the relationships between nodes. The relationships built into XPath, which it calls axes, include self, child, parent, following, and preceding, making it very easy to specify a structure-based query like “find all sections in Chapter 1 through Chapter 5 that have at least two levels of subsections.”[6]
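
The structure-based query quoted above can be sketched in a few lines. This is only an illustration: it uses Python's standard xml.etree.ElementTree module, which supports a limited subset of XPath path syntax, and the document, its element names (book, chapter, section), and the n and id attributes are all hypothetical.

```python
import xml.etree.ElementTree as ET

# Hypothetical document; element and attribute names are illustrative only.
BOOK = """
<book>
  <chapter n="1">
    <section id="1.1">
      <section id="1.1.1"><section id="1.1.1.1"/></section>
    </section>
    <section id="1.2"><section id="1.2.1"/></section>
  </chapter>
  <chapter n="2"><section id="2.1"/></chapter>
  <chapter n="6">
    <section id="6.1">
      <section id="6.1.1"><section id="6.1.1.1"/></section>
    </section>
  </chapter>
</book>
"""

def deep_sections(root, first=1, last=5):
    """Ids of top-level sections in chapters first..last that contain
    at least two levels of subsections."""
    hits = []
    for chapter in root.findall("chapter"):            # step along the child axis
        if first <= int(chapter.get("n")) <= last:
            for section in chapter.findall("section"):
                # "section/section" takes two steps down the child axis.
                if section.find("section/section") is not None:
                    hits.append(section.get("id"))
    return hits

result = deep_sections(ET.fromstring(BOOK))
print(result)
```

Only section 1.1 qualifies here: section 1.2 has a single level of subsections, and Chapter 6 falls outside the requested range. A full XPath processor could express the same selection in a single path expression.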

In addition, tools like Schematron take advantage of XPath’s structural descriptions to test assertions about a document’s structure and content. For example, a common editorial constraint might be that a numbered list must have at least three items.[7]
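
The editorial constraint in this example can be approximated without Schematron itself. The sketch below is not Schematron syntax; it is a plain Python check, using the standard xml.etree.ElementTree module, that tests the same kind of assertion. The ol and li element names and the id attribute are assumptions made for illustration.

```python
import xml.etree.ElementTree as ET

# Hypothetical document with one valid and one too-short numbered list.
DOC = """
<doc>
  <ol id="steps"><li>a</li><li>b</li><li>c</li></ol>
  <ol id="short"><li>a</li><li>b</li></ol>
</doc>
"""

def check_min_items(root, list_tag="ol", item_tag="li", minimum=3):
    """Return the ids of lists that violate the minimum-items assertion."""
    failures = []
    for lst in root.iter(list_tag):                  # every list, at any depth
        if len(lst.findall(item_tag)) < minimum:     # count direct item children
            failures.append(lst.get("id"))
    return failures

violations = check_min_items(ET.fromstring(DOC))
print(violations)
```

The check reports only the list with two items. A real Schematron rule would state the same condition declaratively as an XPath assertion attached to a context node.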

In more qualitative, less information-intensive and more experience-intensive domains, we move toward the narrative end of the Document Type Spectrum, and document instances become more heterogeneous because they are produced by and for people. (See the sidebar, The Document Type Spectrum in “Resource Domain”.) The information conveyed in the documents is conceptual or thematic rather than transactional, and the structural relationships between document parts are much weaker. Instead of precise structure and content rules, there is usually just a shallow hierarchy marked up with word processing or HTML tags like <HEAD>, <H1>, <H2>, and <LIST>.

The internal structural hierarchy in a resource is often extracted and made into a separate and familiar description resource called the “table of contents” to support finding and navigation interactions with the primary resource. In a printed media context, any given content resource is likely to only be presented once, and its page number is provided in the table of contents to allow the reader to locate the chapter, section or appendix in question. In a hypertext media context, a given resource may be a chapter in one book while being an appendix in another. Some tables of contents are created as a static structural description, but others are dynamically generated from the internal structures whenever the resource is accessed. In addition, other types of entry points can be generated from the names or descriptions of content components, like selectable lists of tables, figures, maps, or code examples.
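
A dynamically generated table of contents is essentially a traversal of the internal hierarchy. The following minimal sketch assumes a resource represented as nested (title, children) pairs; that representation and the sample titles are invented for illustration.

```python
def table_of_contents(node, depth=0):
    """Yield indented table-of-contents lines from a nested (title, children) pair."""
    title, children = node
    yield "  " * depth + title
    for child in children:
        # Each level of nesting adds one level of indentation.
        yield from table_of_contents(child, depth + 1)

# Hypothetical internal structure of a book.
book = ("Book", [
    ("Chapter 1", [("Section 1.1", []), ("Section 1.2", [])]),
    ("Appendix A", []),
])

toc = list(table_of_contents(book))
print("\n".join(toc))
```

Because the listing is computed from the structure each time it is requested, the same component can appear under different parents in different hypertext books without maintaining a separate static description.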

Identifying the components and their structural relationships in documents is easier when they follow consistent rules for structure (e.g., every non-text component must have a title and caption) and presentation (e.g., hypertext links in web pages are underlined and change cursor shapes when they are “moused over”) that reinforce the distinctions between types of information components. Structural and presentation features are often ordered on some dimension (e.g., type size, line width, amount of white space) and used in a correlated manner to indicate the importance of a content component.[9]

Many indexing algorithms treat documents as “bags of words” to compute statistics about the frequency and distribution of the words they contain while ignoring all semantics and structure. In Interactions with Resources, we contrast this approach with algorithms that use internal structural descriptions to retrieve more specific parts of documents.
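
A small sketch makes the cost of the “bag of words” simplification concrete. The tokenizer below (lowercase alphabetic runs only) is a deliberately crude assumption, not a description of any particular indexing system.

```python
import re
from collections import Counter

def bag_of_words(text):
    """Count word frequencies, discarding word order and document structure."""
    return Counter(re.findall(r"[a-z]+", text.lower()))

a = bag_of_words("The dog bit the man.")
b = bag_of_words("The man bit the dog.")

print(a)
print(a == b)
```

The two sentences mean different things, yet their bags are identical, because every statistic is computed from word counts alone.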

Structural Relationships between Resources

Many types of resources have “structural relationships” that interconnect them. Web pages are almost always linked to other pages. Sometimes the links among a set of pages remain mostly within those pages, as they are in an e-commerce catalog site. More often, however, links connect to pages in other sites, creating a link network that cuts across and obscures the boundaries between sites.

The links between documents can be analyzed to infer connections between the authors of the documents. Using the pattern of links between documents to understand the structure of knowledge and of the intellectual community that creates it is not a new idea, but it has been energized as more of the information we exchange with other people is on the web or otherwise in digital formats. An important function in Google’s search engine is the PageRank algorithm, which calculates the relevance of a page in part from the number of links that point to it, giving greater weight to links from pages that are themselves linked to often.[10]
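
The idea of weighting incoming links by the importance of the linking page can be illustrated with a simplified power-iteration sketch. This is not Google's production algorithm; the damping value of 0.85, the iteration count, and the four-page link graph are assumptions chosen for illustration.

```python
def pagerank(links, damping=0.85, iterations=100):
    """Simplified PageRank by power iteration.

    links maps each page to the list of pages it links to; every
    link target must also appear as a key.
    """
    pages = sorted(links)
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        new = {p: (1.0 - damping) / n for p in pages}
        for page, targets in links.items():
            if targets:
                # A page splits its current rank evenly among its outgoing links.
                share = damping * rank[page] / len(targets)
                for t in targets:
                    new[t] += share
            else:
                # A page with no outgoing links spreads its rank over all pages.
                for t in pages:
                    new[t] += damping * rank[page] / n
        rank = new
    return rank

# Hypothetical link graph: A, B, and D link to C; C links to A.
links = {"A": ["C"], "B": ["C"], "C": ["A"], "D": ["C"]}
ranks = pagerank(links)
print(ranks)
```

Page C ranks highest because three pages link to it. Page A outranks B and D even though it has only one incoming link, because that link comes from the highly ranked page C.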

Web-based social networks enable people to express their connections with other people directly, bypassing the need to infer the connections from links in documents or other communications.

Hypertext Links

The concept of read-only or follow-only structures that connect one document to another is usually attributed to Vannevar Bush in his seminal 1945 essay titled As We May Think. Bush called it associative indexing, defined as “a provision whereby any item may be caused at will to select immediately and automatically another.”[11]

The “item” connected in this way was for Bush most often a book or a scientific article. However, the anchor and destination of a hypertext link can be a resource of any granularity, ranging from a single point or character to a paragraph, a document, or any part of the resource to which the ends of the link are connected. The anchor and destination of a web link are its structural specification, but we often need to consider links from other perspectives. (See the sidebar, Perspectives on Hypertext Links.)

Theodor Holm Nelson, in a book intriguingly titled Literary Machines, renamed associative indexing as hypertext decades later, expanding the idea to make it a writing style as well as a reading style.[12]

Nelson urged writers to use hypertext to create non-sequential narratives that gave choices to readers, using a novel technique for which he coined the term transclusion.[13]

At about the same time, and without knowing about Nelson’s work, Douglas Engelbart’s Augmenting the Human Intellect described a future world in which professionals equipped with interactive computer displays utilize an information space consisting of cross-linked resources.[14]

In the 1960s, when computers lacked graphic displays and were primarily employed to solve complex mathematical and scientific problems that might take minutes, hours or even days to complete, Nelson’s and Engelbart’s visions of hypertext-based personal computing may have seemed far-fetched. In spite of this, by 1968, Engelbart and his team demonstrated a human-computer interface that included the mouse, hypertext, and interactive media, along with a set of guiding principles.[15]

Hypertext links are now familiar structural mechanisms in information applications because of the World Wide Web, proposed in 1989 by Tim Berners-Lee and Robert Cailliau.[22]

They invented the methods for encoding and following hypertext links using the now popular HyperText Markup Language (HTML).[23]

The resources connected by HTML’s hypertext links are not limited to text or documents. Selecting a hypertext link can invoke a connected resource that might be a picture, video, or interactive application.[24]

By 1993, personal computers with a graphic display, speakers, and a mouse pointer had become ubiquitous. NCSA Mosaic is widely credited with popularizing the World Wide Web and HTML in 1993 by introducing inline graphics, audio, and video media, rather than requiring links to media segments that opened in a separate window.[25]

The ability to transclude images and other media would transform the World Wide Web from a text-only viewer with links to a “networked landscape” with hypertext signposts to guide the way. On 12 November 1993, the first full release of NCSA Mosaic on the world’s three most popular operating systems (X Windows, Microsoft Windows, and Apple Macintosh) enabled the general public to access the network with a graphical browser.[26]

Since browsers made them familiar, hypertext links have been used in other computing applications as structure and navigation mechanisms.

Analyzing Link Structures

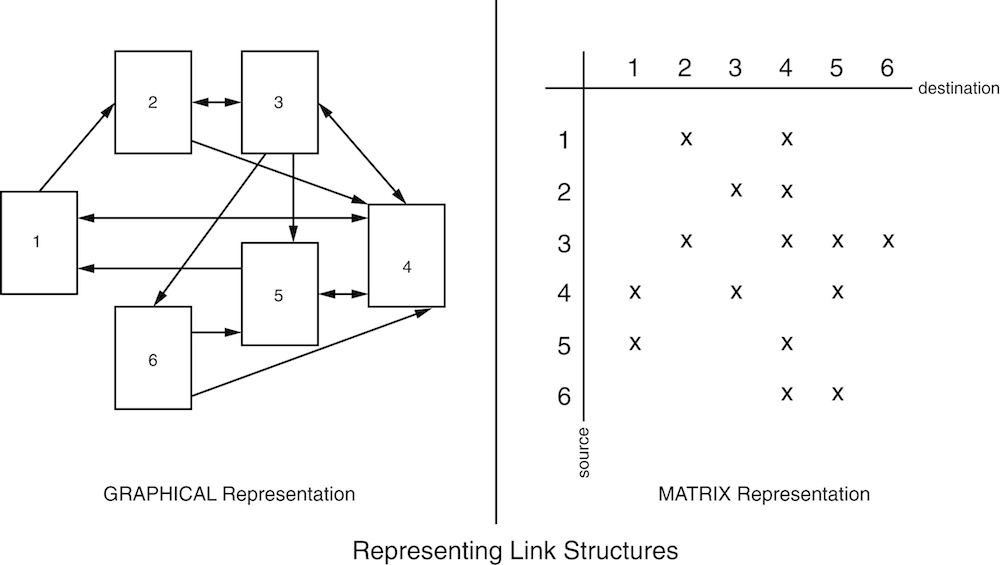

We can portray a set of links between resources graphically as a pattern of boxes and links. Because a link connection from one resource to another need not imply a link in the opposite direction, we distinguish one-way links from explicitly bi-directional ones.

A graphical representation of link structure is shown in the left panel of Figure: Representing Link Structures. For a small network of links, a diagram like this one makes it easy to see that some resources have more incoming or outgoing links than other resources. However, for most purposes we leave the analysis of link structures to computer programs, and there it is much better to represent the link structures more abstractly in matrix form. In this matrix the resource identifiers on the row and column heads represent the source and destination of the link. This is a full matrix because not all of the links are symmetric; a link from resource 1 to resource 2 does not imply one from 2 to 1.
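
As a minimal sketch of the matrix form, the code below builds a link matrix from a list of (source, destination) pairs. The four resources and their links are hypothetical and are not taken from the figure.

```python
def link_matrix(n, links):
    """Build an n x n matrix where row = source and column = destination."""
    matrix = [[0] * n for _ in range(n)]
    for source, dest in links:
        matrix[source - 1][dest - 1] = 1   # resources are numbered from 1
    return matrix

# Hypothetical one-way links among four resources.
links = [(1, 2), (2, 3), (3, 1), (3, 4)]
M = link_matrix(4, links)

out_links = [sum(row) for row in M]        # row sums: outgoing links
in_links = [sum(col) for col in zip(*M)]   # column sums: incoming links

for row in M:
    print(row)
```

Row and column sums give the outgoing and incoming link counts directly, and the asymmetry is visible in the data: the entry for the link from resource 1 to resource 2 is set, while the entry for the reverse direction is not.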

The structure of links between web resources can be represented graphically or in a matrix. The matrix representation is a more abstract one that can be analyzed by computers.

A matrix representation of the same link structure is shown in the right panel of Figure: Representing Link Structures. This representation models the network as a directed graph in which the resources are the vertices and the relationships are the edges that connect them. We now can apply graph algorithms to determine many useful properties. A very important property is reachability, the “can you get there from here” property.[27]
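
Reachability can be computed as the transitive closure of the link matrix. The sketch below uses Warshall's algorithm, one standard way to do this; the four-resource matrix is a hypothetical example, not the one in the figure.

```python
def transitive_closure(matrix):
    """Warshall's algorithm: reach[i][j] is 1 if j can be reached from i
    by following one or more links."""
    n = len(matrix)
    reach = [row[:] for row in matrix]     # copy so the input is not modified
    for k in range(n):                     # allow resource k as an intermediate stop
        for i in range(n):
            for j in range(n):
                if reach[i][k] and reach[k][j]:
                    reach[i][j] = 1
    return reach

# Hypothetical link matrix: 1 -> 2, 2 -> 3, 3 -> 1, 3 -> 4.
M = [
    [0, 1, 0, 0],
    [0, 0, 1, 0],
    [1, 0, 0, 1],
    [0, 0, 0, 0],
]
R = transitive_closure(M)
for row in R:
    print(row)
```

Resources 1, 2, and 3 form a cycle, so each can reach every resource, including itself. Resource 4 has no outgoing links, so its row stays empty: you cannot get anywhere from there.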

Other useful properties include the average number of incoming or outgoing links, the average distance between any two resources, and the shortest path between them.
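
Two of these properties can be sketched with the standard library alone: the average number of outgoing links, and the shortest path found by breadth-first search. The graph is a hypothetical example stored as a dictionary from each resource to the resources it links to.

```python
from collections import deque

def shortest_path(graph, start, goal):
    """Breadth-first search; returns the shortest list of resources from
    start to goal, or None if goal is unreachable."""
    queue = deque([[start]])
    seen = {start}
    while queue:
        path = queue.popleft()
        if path[-1] == goal:
            return path
        for nxt in graph.get(path[-1], []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(path + [nxt])
    return None

# Hypothetical directed link graph.
graph = {1: [2], 2: [3], 3: [1, 4], 4: []}

path = shortest_path(graph, 1, 4)
separation = len(path) - 1                  # number of links on the path
avg_out = sum(len(t) for t in graph.values()) / len(graph)

print(path, separation, avg_out)
```

The number of links on the shortest path is the “degrees of separation” between two resources. Averaging that count over all connected pairs gives the average distance.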

Bibliometrics, Shepardizing, Altmetrics, and Social Network Analysis

Information scientists began studying the structure of scientific citation, now called bibliometrics, nearly a century ago to identify influential scientists and publications. This analysis of the flow of ideas through publications can identify “invisible colleges” of scientists who rely on each other’s research, and recognize the emergence of new scientific disciplines or research areas. Universities use bibliometrics to evaluate professors for promotion and tenure, and libraries use it to select resources for their collections.[28]

The expression of citation relationships between documents is especially nuanced in legal contexts, where the use of legal cases as precedents makes it essential to distinguish precisely where a new ruling lies on the relational continuum between “Following” and “Overruling” with respect to a case it cites. The analysis of legal citations to determine whether a cited case is still good law is called Shepardizing because lists of cases annotated in this way were first published in the late 1800s by Frank Shepard, a salesman for a legal publishing company.[29]

The links pointing to a web page might be thought of as citations to it, so it is tempting to make the analogy to consider Shepardizing the web. But unlike legal rulings, web pages aren’t always persistent, and only courts have the authority to determine the value of cited cases as precedents, so Shepard-like metrics for web pages would be tricky to calculate and unreliable.

Nevertheless, the web’s importance as a publishing and communication medium is undeniable, and many scholars, especially younger ones, now contribute to their fields by blogging, Tweeting, leaving comments on online publications, writing Wikipedia articles, giving MOOC lectures, and uploading papers, code, and datasets to open access repositories. Because the traditional bibliometrics pay no attention to this body of work, alternative metrics or “altmetrics” have been proposed to count these new venues for scholarly influence.[30]

Facebook’s valuation is based on its ability to exploit the structure of a person’s social network to personalize advertisements for people and “friends” to whom they are connected. Many computer science researchers are working to determine the important characteristics of people and relationships that best identify the people whose activities or messages influence others to spend money.[31]

-

Of the five perspectives on relationships in this chapter, the structural one comes closest to the meaning of “relation” in mathematics and computer science, where a relation is a set of ordered elements (“tuples”) of equal degree (“Degree”). A binary relation is a set of element pairs, a ternary relation is a set of 3-tuples, and so on. The elements in each tuple are “related” but they do not need to have any “significant association” or “relationship” among them.

-

See (Travers and Milgram 1969) and (Markoff and Sengupta 2011).

-

This seems like an homage to Jimi Hendrix based on the title of a 1967 song, Third Stone from the Sun. See http://en.wikipedia.org/wiki/Third_Stone_from_the_Sun.

-

The subfield of natural language processing called “named entity recognition” has as its goal the creation of mixed content by identifying people, companies, organizations, dates, trademarks, stock symbols, and so on in unstructured text.

-

Text Encoding Initiative 13. Names, Dates, People, and Places.

-

See (Holman 2001) or (Tidwell 2008).

-

See (van der Vlist 2007) and schematron.org for overviews. See (Hamilton and Wood 2012) for a detailed case study.

-

These layout and typographic conventions are well known to graphic designers (Williams 2012) but are also fodder for more academic treatment in studies of visual language or semiotics (Crow 2010).

-

(Page, Brin, Motwani, and Winograd 1999) describes PageRank as it stood when its inventors were computer science graduate students at Stanford. It is not a coincidence that the technique shares a name with one of its inventors, Google co-founder and CEO Larry Page. (Langville and Meyer 2012) is an excellent textbook. The ultimate authority about how PageRank works is Google; see https://www.google.com/insidesearch/howsearchworks/thestory/.

-

(Bush 1945). “Wholly new forms of encyclopedias will appear, ready made with a mesh of associative trails running through them...” See http://www.theatlantic.com/magazine/archive/1945/07/as-we-may-think/303881/.

-

See Computer Lib/Dream Machines (Nelson 1981) for an early example of Nelson’s non-linear book style.

-

(Engelbart 1963) Douglas Engelbart credits Bush’s As We May Think article as his direct inspiration. Engelbart was in the US Navy, living in a hut in the South Pacific during the last stages of WWII when he read The Atlantic monthly magazine in which Bush’s article was published.

-

Doug Engelbart’s demonstration has been called the “Mother of All Demos” and can be seen in its entirety at http://sloan.stanford.edu/MouseSite/1968Demo.html.

-

See (Lorch 1989), (Mann and Thomson 1988). For example, an author might use “See” as in “See (Glushko et al. 2013)” when referring to this chapter if it is consistent with his point of view. On the other hand, that same author could use “but” as a contrasting citation signal, writing “But see (Glushko et al. 2013)” to express the relationship that the chapter disagrees with him.

-

Before the web, most hypertext implementations were in stand-alone applications like CD-ROM encyclopedias or in personal information management systems that used “cards” or “notes” as metaphors for the information units that were linked together, typically using rich taxonomies of link types. See (Conklin 1987), (Conklin and Begeman 1988), and (DeRose 1989).

-

Many of the pre-web hypertext designs of the 1980s and 1990s allowed for n-ary links. The Dexter hypertext reference model (Halasz and Schwartz 1994) elegantly describes the typical architectures. However, there is some ambiguity in the use of the term binary in hypertext link architectures. One-to-one vs. one-to-many is a cardinality distinction, and some people reserve binary for discussions of degree.

-

Most designers use a variety of visual cues and conventions to distinguish hyperlinks (e.g., plain hyperlink, button, selectable menu, etc.) so that users can anticipate how they work and what they mean. A recent counter-trend called “flat design,” exemplified most notably by the user interfaces of Windows 8 and iOS 7, argues for a minimalist style with less variety in typography, color, and shading. Flat designs are easier to adapt across multiple devices, but convey less information.

-

Most web links are very simple in structure. The anchor text in the linking document is wrapped in <A> and </A> tags, with an HREF (hypertext reference) attribute that contains the URI of the link destination if it is in another page, or a reference to an ID attribute if the link is to a different part of the same page. HTML also has a <LINK> tag, which, along with <A>, has REL (relationship) and REV (reverse relationship) attributes that enable the encoding of typed relationships in links. In a book context, for example, link relationships and reverse relations include obvious candidates such as next, previous, parent, child, table of contents, bibliography, glossary and index.

-

Using hypertext links as interaction controls is the modern dynamic manifestation of cross references between textual commentary and illustrations in books, a mechanism that dates from the 1500s (Kilgour 1998). Hypertext links can be viewed as state transition controls in distributed collections of web-based resources; this design philosophy is known as Representational State Transfer (REST). See (Wilde and Pautasso 2011).

-

Mosaic was developed in Joseph Hardin’s lab at the National Center for Supercomputing Applications (NCSA), hosted by the University of Illinois at Urbana-Champaign, by Marc Andreessen, Eric Bina, and a team of student programmers. Mosaic was initially developed on the Unix X Window System. See http://www.ncsa.illinois.edu/Projects/mosaic.html.

-

Reachability is determined by calculating the transitive closure of the link matrix. A classic and well written explanation is (Agrawal, Borgida, and Jagadish 1989).

-

Eugene Garfield developed many of the techniques for studying scientific citation and he has been called the “grandfather of Google” (http://blog.lib.uiowa.edu/hardinmd/2010/07/12/eugene-garfield-librarian-grandfather-of-google/) because of Google’s use of citation patterns to determine relevance. See (Garfield, Cronin, and Atkins 2000) for a set of papers that review Garfield’s many contributions. See (Bar-Ilan 2008) and (Neuhaus and Daniel 2008) for recent reviews of data sources and citation metrics.

-

Shepard first put adhesive stickers into case books, then published lists of cases and their citations. Shepardizing is a big business for Lexis/Nexis and Westlaw (where the technique is called “KeyCite”).

-

See http://altmetrics.org/manifesto/ for the original call for altmetrics. Altmetric.com and Plum Analytics are firms that provide altmetrics to authors, publishers, and academic institutions. In 2016 the National Information Standards Organization sought to standardize the definition and use cases for altmetrics, which should benefit everyone who cares about them. See also http://www.niso.org/topics/tl/altmetrics_initiative/

-

See (Watts 2004) for a detailed review of the theoretical foundations. See (Wu 2012) for applications in web-based social networks.